
In 1984, fast food hamburger chain Wendy’s launched an advertising campaign comparing Wendy’s hamburgers to the competition. It starred three elderly ladies. Two of the ladies in the ad were deep in analysis over the size and quality of the bun, but it was Clara Peller who asked the question that took off in pop culture — “Where’s the Beef?”

In its simplicity, the Wendy’s ad captured two of the most fundamental concepts of data analytics: the quest for value (the beef) and the oft-recurring problem in our field of focusing on the wrong analysis (the bun).

In 2010 I moved to Texas and, in keeping with the beef-inspired theme of this article, I discovered what a brisket slow-smoked over a hickory fire could become. I also discovered that achieving such divine perfection was not a matter of luck, but the result of well-applied skills deeply felt by the practitioner.

As evidence, I offer ANSC 117. It sounds like some new text or SQL standard that integrates effectively with Python code but, in fact, it is a course in BBQ science offered by Texas A&M University. And yes, we do take it that seriously here in Texas.

If I had to draw a parallel between the study of BBQ and analytics, it would address two main ideas: analytical coherence and analytical cognitivism. While seemingly abstract, they address this question of value in a broader context. The A&M program, for example, features an article titled “BBQ Science” that explores the following:

· Ever wonder why foods brown during cooking?

· Ever wonder why the smoke ring appears on barbecue?

· Ever wonder why some cuts require higher internal temperatures before they are tender?

In my eyes, these questions are no different than ones I would consider in the field of data & analytics. They seek to understand, explain and leverage desired outcomes.

Asking what level of browning is desired in BBQ is not a question answered by the data of our experiences with browning, but a question of philosophy and preference. There is no single correct answer to this question. It is interpretive, and it requires knowledge and understanding of our target audience’s tastes and preferences. Asking why a food browns is a question of science and causality. It is also not a question of data, but of fact. The Maillard reaction, in which amino acids in foods react with reducing sugars, is responsible for browning.[1] Understanding this question of scientific fact is a significant component of the overall framework designed to achieve the desired outcome. Asking how long a cooking time produces the desired browning in any given meat is a question of data analytics, answered by the data transactions recorded in our prior experiences.

Interestingly, in the example above, the first two questions in understanding and achieving the outcome are not data analytics questions, while the third is. All are equally important, though, and this observation raises the question of whether our approach in any given analytics endeavor is fully formed. Are we asking the right questions of our data within a proper framework that understands the desired outcomes, incorporates applicable science and fact, and pursues outcomes that are relevant and analysis-worthy? I would offer that we call this analytical coherence: a formation of analytical thought and problem solving that addresses the desired outcome as a unified whole.

Next, consider the question of tenderness, that fall-off-the-bone, cut-with-a-spoon, melt-in-your-mouth quality of perfectly cooked brisket or ribs. Dr. Greg Blonder, an M.I.T. and Harvard graduate and former chief technical advisor at AT&T’s Bell Labs, solved the haunting problem in BBQ science known as “the stall,” a phase in slow smoking when the internal temperature of the meat seems to plateau and stop climbing, dead in its tracks.

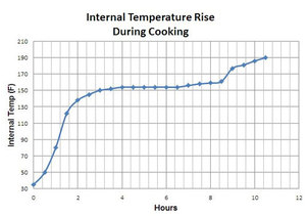

In the interest of brevity, I will not go into detail here, but suffice it to say that smoking brisket, pork shoulder or ribs is a nail-biting experience that can go on for ten to fourteen hours. It is a balancing act: internal temperatures must rise slowly, getting hot enough to soften the collagen in the meat’s connective tissues while falling short of toughening the meat itself. The stall typically occurs a couple of hours into the process, when temps just seem to flatten and hold for hours. For many, panic sets in. You turn up the heat or move the meat to the oven, and so begins the downward spiral that produces random and unrepeatable outcomes: often bad, or at best fair and ordinary, product.
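For readers who log their probe temperatures, the stall described above can be spotted in the data rather than guessed at. What follows is only a minimal sketch in Python: the function name `find_stall`, the thresholds `max_rate` and `min_hours`, and the sample readings are all hypothetical and made up for illustration, not measurements from a real cook.

```python
def find_stall(readings, max_rate=2.0, min_hours=1.0):
    """Find the longest plateau in a probe log.

    readings  -- list of (hour, temperature) pairs in time order
    max_rate  -- assumed threshold: a rise slower than this many
                 degrees per hour counts as "flat"
    min_hours -- assumed minimum duration for a plateau to count

    Returns (start_hour, end_hour) of the longest flat run, or None.
    """
    best = None
    run_start = None
    for (t0, temp0), (t1, temp1) in zip(readings, readings[1:]):
        rate = (temp1 - temp0) / (t1 - t0)
        if rate < max_rate:
            # Still flat: extend the current run (or start one).
            if run_start is None:
                run_start = t0
            if best is None or t1 - run_start > best[1] - best[0]:
                best = (run_start, t1)
        else:
            # Temperature is climbing again, so the run is over.
            run_start = None
    if best is not None and best[1] - best[0] >= min_hours:
        return best
    return None


# Hypothetical probe log: (hour, internal temperature in degrees).
probe_log = [(0, 40), (1, 110), (2, 150), (3, 158), (4, 159),
             (5, 159), (6, 160), (7, 172), (8, 188), (9, 200)]
print(find_stall(probe_log))
```

Knowing that the flat stretch is a recognized, expected phase is exactly what keeps the cook from reaching for the oven.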

The stall was a bona fide mystery that no one could explain until Blonder. Heat produced in the smoker is consumed in several ways. Some escapes, some is used to melt fat, and, as already noted, some is used to convert collagen in connective tissues to gelatin, which is what makes the meat tender. The remainder drives up the internal temperature of the meat. But how are these processes at work in the lifecycle of the smoke, and why does the ‘stall’ happen?

On the basis that the conversion of collagen was the culprit absorbing the energy during the ‘stall,’ Blonder did some calculations. He showed that the amount of energy needed to convert collagen to gelatin would be far less than what was being consumed during the stall. The numbers were not adding up. There just was not enough collagen in the connective tissues to suck up all the energy and prevent the meat temperature from rising — yet the ‘stall’ was happening — so something else had to be going on.

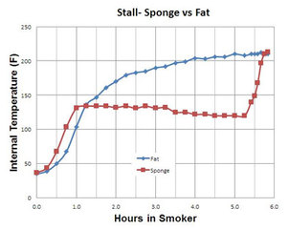

He hypothesized that the stall might be due to heat consumed by evaporative cooling, the same process by which evaporating sweat cools the human body in extreme heat. But he also acknowledged that the heat might be consumed by melting fat. He took a lump of pure beef fat and put it in a smoker set to 225 degrees. He also soaked a large cellulose sponge in water and added that to the smoker. He inserted a probe in each. Looking at the curves of the internal temperature readings that resulted, the answer became clear. The beef fat, containing no water, had no stall. Its internal temperature rose steadily. The sponge’s internal temperature increased at first like the beef fat’s but hit a significant stall after the first hour and even declined over the next four. However, after the sponge dried out, its internal temperature climbed again and rapidly caught up to the beef fat. The conclusion was inescapable. The stall is caused by evaporative cooling, which continues until the last drop of moisture is gone, allowing the heat to go toward raising the internal meat temperature.
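The logic of the fat-versus-sponge experiment can be illustrated with a toy heat balance. To be clear, this is not Blonder's calculation or his data. It is a minimal sketch in Python in which every number is an assumption chosen for illustration: the rate constants `heat_gain` and `evap_loss`, the `water_budget` (how much cooling the stored moisture can deliver, expressed in degrees), a 40 degree starting temperature, and a smoker temperature of 225 taken to be Fahrenheit. The object is treated as a single lump with one temperature.

```python
def simulate_cook(water_budget, hours=12.0, dt=0.01, smoker_temp=225.0,
                  start_temp=40.0, heat_gain=1.0, evap_loss=0.5):
    """Toy lumped model of one object in a smoker (all values assumed).

    Heating is proportional to the gap between smoker and object.
    While moisture remains, evaporative cooling is taken to grow with
    the object's temperature above its starting point.
    Returns the temperature trace, one value per time step of dt hours.
    """
    temp = start_temp
    water = water_budget
    trace = [temp]
    for _ in range(round(hours / dt)):
        heating = heat_gain * (smoker_temp - temp)
        cooling = evap_loss * (temp - start_temp) if water > 0 else 0.0
        water -= cooling * dt            # moisture is used up by cooling
        temp += (heating - cooling) * dt
        trace.append(temp)
    return trace


def temp_at(trace, hour, dt=0.01):
    """Look up the simulated temperature at a given hour."""
    return trace[round(hour / dt)]


fat = simulate_cook(water_budget=0.0)       # no water: nothing to evaporate
sponge = simulate_cook(water_budget=300.0)  # wet sponge: finite moisture

for hour in (1, 3, 5, 8, 12):
    print(hour, round(temp_at(fat, hour), 1), round(temp_at(sponge, hour), 1))
```

Under these assumptions the dry object climbs steadily, while the wet one flattens out for hours and resumes climbing only once its moisture budget is spent. The toy model does not reproduce the slight decline Blonder observed in the sponge, but it shows why evaporation alone is enough to produce a plateau.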

Blonder points out that multiple factors affect the process. The size, shape and surface texture of the meat can influence the stall by influencing how much moisture is available for evaporation. Airflow and humidity in the cooker also play a role. Draft in your smoker will impact the process. Pellet smokers have fans that create convection, which speeds evaporation and creates a shorter stall.[2] The newfound understanding of how the stall works offered invaluable insight into how to reproduce the process in future BBQ exploits. I might even argue it was a paradigm shift in which prior theories about certain processes around the smoke were discarded and a new paradigm started shifting beliefs and practices in the pitmaster community at large.

So how does this relate to data analytics? Consider BBQ smoke as an analogy for a long running data engineering script, incorporating multiple variables that transition data through various states to its final landing spot. Further, consider BBQ as a business enterprise and smoking a brisket or pork shoulder as one of the more complex processes within that enterprise that transforms raw product for consumption.

We still begin with the questions of what, why and how that drive the concept of analytical coherence already discussed. But now we consider the more tactical questions of raw material, the features of that raw material, and the natural and manufactured processes, algorithms and mathematics that involve quantitative metrics of time, energy, and heat, all in pursuit of our desired outcomes.

As technologists in data analytics, this analogy is not really anything groundbreaking, in that we understand concepts of raw data integrity and completeness. We understand that transformation algorithms to modify the data in pursuit of the outcome are often a combination of art and science. We realize that proper technology selection is critical. It must handle the data volumes and processing we need to throw at it to be ready in time for when guests arrive.

We understand scripts gone wrong and the impact of final analytical outputs that fall flat like a dried-out, tough piece of brisket. No one wants to consume it. And even if we get it right, can we repeat the outcomes once we achieve what was desired? Do we understand it well enough to address variant conditions the next time around? Is our process objectively driven by a proven method that allows us to do so? This is the idea of analytical cognitivism: the unbiased application of perception, memory, judgement, and reasoning to the process, in pursuit of the desired outcomes. It rejects emotional and arbitrary processes.

Analytical coherence and analytical cognitivism work together to suggest a framework for addressing business analytics challenges. Coherence is the part of the theory that frames the “what,” “why,” and “how” questions, while cognitivism sets the stage for process. The intent is to ensure a sort of symbiosis in which a fully capable, unbiased process is driven by a fully formed, value-driven agenda. It is a data analytics framework perfectly epitomized in a well-smoked Texas brisket.

It is from this perspective that I encourage you to consider how a given analytics initiative, and the fully formed framework behind it, mirrors the smoking of a brisket. What value is there in barbecuing if no one wants to eat what comes from it? Food for thought.

The Systech Solutions, Inc. Blog Series is designed to showcase ongoing innovations in the data and analytics space. If you have any suggestions for an upcoming article, or would like to volunteer to be interviewed, please contact Olivia Klayman at oliviak@systechusa.com
